Three Security Observations

1.) In the technology world, the strength of security is often quoted quantitatively in terms of combinatorial complexity (e.g. an 8-character password drawn from the 94 printable, non-space ASCII characters has 94^8 possible values), and qualitatively as if there were only one attack vector: a malicious actor with full access to the technology in question and no significant time constraints.

That attack vector is more or less equivalent to scientists in a laboratory trying to attack some piece of technology you have.

That’s pretty much the worst-case scenario, so it makes sense for this standard to feature prominently when judging security. But the world isn’t perfect – not even for malicious actors. There are many other attack scenarios that don’t resemble this full-control-with-no-time-constraints setup.
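As a point of reference, the worst-case figure quoted above is easy to reproduce. This is a minimal Python sketch, assuming a 94-character alphabet (printable ASCII without the space) and passwords chosen uniformly at random; the function names are illustrative only.

```python
import math


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of exactly `length` characters."""
    return alphabet_size ** length


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Equivalent strength in bits, assuming every character is picked uniformly at random."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    # The 8-character example from the text: 94^8 candidates.
    print(keyspace(94, 8))
    print(round(entropy_bits(94, 8), 1))
```

Note that this number says nothing about who is attacking or under what conditions, which is exactly the limitation being discussed.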

What seems to be missing from security analysis are metrics relevant to the many other, imperfect attack scenarios that are liable to exist.

One metric, for example, might be called time-pressure-complexity: it would measure how long an intruder would need to attack some piece of technology while leaving it in place, rather than removing it from its current setting.
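There is no established formula for such a metric, so the following is only one possible interpretation: treat the attack as an exhaustive search that has to finish within the window the intruder can afford to stay on site. The function names, the 4-digit PIN, the guess rate, and the ten-minute window are all hypothetical values chosen for illustration.

```python
def expected_seconds_to_guess(keyspace: int, guesses_per_second: float) -> float:
    """Average search time for a uniformly random secret (about half the keyspace is tried)."""
    return (keyspace / 2) / guesses_per_second


def success_probability(keyspace: int, guesses_per_second: float, window_seconds: float) -> float:
    """Chance that an exhaustive search succeeds before the intruder has to leave."""
    guesses = guesses_per_second * window_seconds
    return min(1.0, guesses / keyspace)


if __name__ == "__main__":
    pin_keyspace = 10 ** 4   # hypothetical 4-digit PIN
    manual_rate = 0.5        # assumed: one manual attempt every two seconds
    window = 10 * 60         # assumed: ten minutes alone with the device

    print(expected_seconds_to_guess(pin_keyspace, manual_rate))
    print(success_probability(pin_keyspace, manual_rate, window))
```

Under the laboratory model the same PIN is trivially weak; under these assumed in-place constraints most attempts would run out of time, which is the kind of distinction the metric is meant to capture.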

In a similar setup, another metric might be dexterity-complexity: how much physical dexterity an attack demands of the intruder. This metric is not specific to passwords, but as a simple example, suppose one created a very long password that was easy for the owner to remember and type, but that would naturally take an intruder longer to enter. We can take that further and imagine the password avoiding the common typing patterns (e.g. those of a natural language) the intruder is used to. Even if the intruder knew the password letter for letter, he might fail to enter it correctly under time pressure, which could lead to panic and a further degradation of that entire attack vector.

In a panic, people tend to lose their fine motor skills, and high dexterity-complexity would aim to exploit that weakness.
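One rough way to put a number on this idea is to ask how likely it is that someone types the whole password without a single slip. The sketch below assumes each keystroke fails independently with a fixed probability, which is a simplification, and the error rates used are invented for illustration rather than measured.

```python
def clean_entry_probability(length: int, per_key_error_rate: float) -> float:
    """Probability of typing `length` characters with no mistakes,
    assuming independent errors at a fixed per-keystroke rate."""
    return (1.0 - per_key_error_rate) ** length


if __name__ == "__main__":
    length = 30            # hypothetical long password
    practiced_rate = 0.02  # assumed error rate for the owner, who types it daily
    panicked_rate = 0.10   # assumed error rate for an intruder under time pressure

    print(clean_entry_probability(length, practiced_rate))
    print(clean_entry_probability(length, panicked_rate))
```

Because the per-keystroke success rate is raised to the power of the length, a modest increase in the error rate is amplified sharply for long passwords.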

Although these are secondary metrics to consider, they’re still extremely relevant to the real world, and so it would make sense to have a proper accounting of them in many types of security analysis.

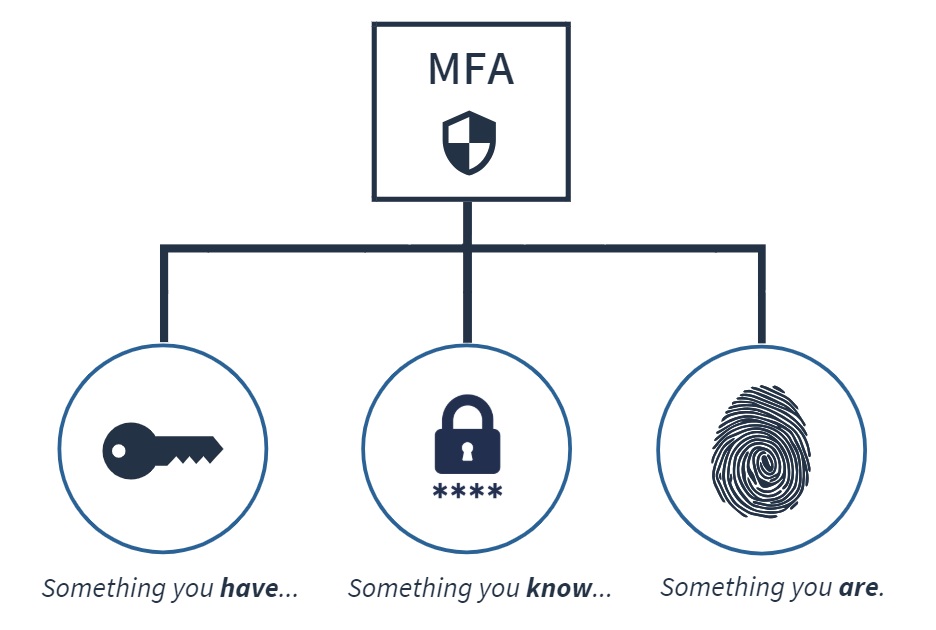

2.) Security analysis for multi-factor authentication often describes three separate notions: something you know, something you have, and something you are.

But I believe there’s actually slightly more to this security space than the current model describes.

I believe there’s another distinct notion: something you inherently own.

Perhaps it would be a subset of something you have.

The difference is that if a malicious actor takes your metal key (something you have), it is now something that the malicious actor has.

But, for example, if a malicious actor steals a phone with a SIM card tied to your phone number (perhaps utilized for 2FA), you will, generally speaking, have the ability to get that SIM card invalidated by the carrier and have your phone number re-associated with a new SIM card.

To a significant degree, you inherently own that phone number – it’s not just something you happen to have.

Although that was an overly simple example, it demonstrates an additional security notion and a meaningful difference between it and its closest relative.
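The distinction can be sketched as a small data model. Everything here is hypothetical: the `reissuable_to_owner` flag is simply one way to encode "a third party can invalidate the stolen token and re-bind the identity to the rightful owner", and it is not part of any standard taxonomy.

```python
from dataclasses import dataclass
from enum import Enum


class PossessionKind(Enum):
    HAVE = "something you have"
    INHERENTLY_OWN = "something you inherently own"


@dataclass(frozen=True)
class PossessionFactor:
    name: str
    # Assumed criterion: can a stolen token be invalidated and the underlying
    # identity re-issued to its rightful owner (e.g. by a carrier)?
    reissuable_to_owner: bool

    @property
    def kind(self) -> PossessionKind:
        if self.reissuable_to_owner:
            return PossessionKind.INHERENTLY_OWN
        return PossessionKind.HAVE


if __name__ == "__main__":
    for factor in (PossessionFactor("metal key", False),
                   PossessionFactor("phone number", True)):
        print(f"{factor.name}: {factor.kind.value}")
```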

3.) Major mainstream technology providers (e.g. email providers) offer a sizable number of security options, ranging from rustic passwords to biometric technology. But I believe the not-so-state-of-the-art way those options are communicated to users is serious cause for concern.

Currently, the consumer/user is not provided clear guidance on what’s going on with their security options.

There are generally three different times an email provider will bring those extra security options (e.g. phone text message, biometric) into play:

- MFA login

- account recovery (can’t log in to your account)

- trying to change security settings for the account

The technology providers allow the user to define their additional security options, but they do not provide any guidance at all regarding what will happen in each of those three different scenarios, and if I’m not mistaken, there are differences.

Exactly what will happen in each scenario should be communicated clearly to the user, but currently the system is opaque: you set up your security options and only find out later how they’re employed in each individual scenario.

In my opinion, three visual diagrams should be shown to the user – side-by-side if possible – depicting what will occur.
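Even before any diagram is drawn, the information behind it could be as simple as a table of which factor combinations each scenario accepts. The policy below belongs to an imaginary provider and is invented purely for illustration; it does not describe how any real service behaves.

```python
# Hypothetical policy: for each scenario, the factor combinations that are accepted.
POLICY: dict[str, list[set[str]]] = {
    "MFA login": [{"password", "authenticator app"}, {"password", "SMS code"}],
    "account recovery": [{"SMS code"}, {"recovery email"}],
    "change security settings": [{"password", "authenticator app"}],
}


def can_complete(scenario: str, available: set[str]) -> bool:
    """True if the user still holds every factor in at least one accepted combination."""
    return any(combo <= available for combo in POLICY[scenario])


def summarize(available: set[str]) -> dict[str, bool]:
    """Outcome of each scenario for a user who holds only the `available` factors."""
    return {scenario: can_complete(scenario, available) for scenario in POLICY}


if __name__ == "__main__":
    # A user who has lost the password and the authenticator app
    # but can still receive text messages.
    for scenario, ok in summarize({"SMS code"}).items():
        print(f"{scenario}: {'possible' if ok else 'blocked'}")
```

Publishing a mapping like this, in whatever visual form, would let a user see the consequences of each choice before relying on it.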

Knowing how those options are actually employed in those three scenarios can definitely affect the user’s decision making, and disclosing it is simply good practice. But unfortunately the providers are not living up to their affirmed security and corporate responsibility principles when it comes to this.

As a final quiz, imagine a user who has set up a mainstream email account (of your choosing) with a password and a garden-variety authenticator app. The user forgets the password, and also, his phone is completely destroyed. (In this example, it sure is especially clear the authenticator app is something he had, not something he inherently owned.) How do those three security scenarios play out? Don’t know, do you? But shouldn’t you? Additionally, if a phone number was also required to set up the account, perhaps to save the user in this situation, would that or would that not contradict the theory that authenticator apps are superior to text messages for account security? Whatever your answer is, it should serve to further articulate the distinction between something you have and something you inherently own.